Taking expectations on the computer using a Markov chain¶

In the Bellman equation and in the Euler equation we have the expectation operator. This expectation is taken over the value of \(\varepsilon'\), which will imply \(Z'\). Put differently, we are taking expectations of \(Z'\) conditional on the current \(Z\). An expectation is an integral and an integral can be thought of as a the limit of a Riemann sum as the number of rectangles (or other shapes) goes to infinity. On the computer we will use the same idea as a Riemann sum, just a finite number of them.

- So suppose we have a distribution over \(x\in\mathcal{X}\) with pdf \(p\) and CDF \(P\). To take the expectation of \(F(x)\) we will choose a set of quadrature points \(X \in \mathcal{X}\) and we will construct some quadrature weights \(Q\) that represent the probability associated with \(X\). Suppose we have \(J\) points in \(X\) and \(Q\). Our expectation of \(F(x)\) is then given by

- \[\mathbb E \left[ F(x) \right] = \int_{x\in\mathcal{X}} F(x) p(x) dx \approx \sum_{j=1}^J F(X_j) Q_j\]

There are many ways we could choose the quadrature points and weights. We will use a method that is particularly conveneint for our purposes where we are interested in taking expectations of a stochastic process that follows an AR(1) process. This is the Tauchen (1986) method for discretizing an AR(1).

- By repeated substitution the random variable \(Z_t\) can be considered as an infinite sum

- \[Z_t = \sum_{\tau = -\infty}^t\rho^{t-\tau} \varepsilon_\tau.\]

- As the weighted sum of normally distributed variables is it self normal we have that the unconditional distribution of \(Z_t\) is normal with mean given by

- \[\sum_{\tau = -\infty}^t\rho^{t-\tau} \mathbb E \left[ \varepsilon_\tau \right] = 0.\]

- and variance given by

- \[\sum_{\tau = -\infty}^t\rho^{2(t-\tau)} \sigma^2 = \frac{\sigma^2}{1-\rho^2}.\]

We will put an evenly-spaced grid on \(Z_t\) on the interval that is centered at zero and spans two standard deviations of its unconditional distribution in each direction. For each \(Z_i\) in this grid, we will form the conditional expectation over \(Z'\) by using a set of quadrature points for \(\varepsilon'\) such that the resulting values for \(Z' = \rho Z_i + \varepsilon'\) are exactly the points in our grid on \(Z\). So for each \(Z_i\) we have a probability of moving to the other discrete values in the grid \(Z_j\). The result is a Markov chain that approximates the continuous AR(1) process.

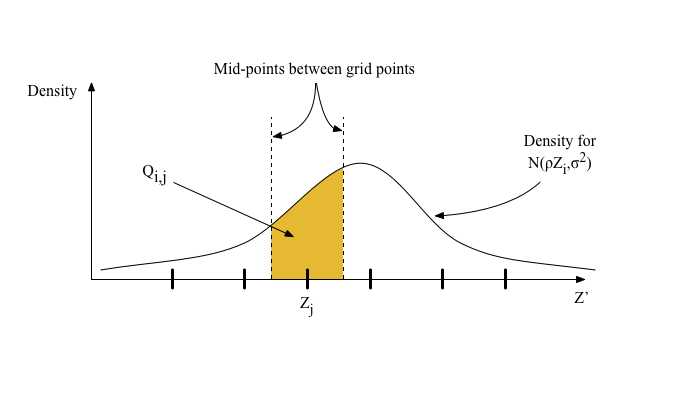

To create the transition probabilities for the Markov chain, the Tauchen method simply assigns the probability mass in an interval between two grid points to the nearer of the two grid points. The following diagram shows how the Tauchen method creates the transition probability \(Q_{i,j}\) of moving from \(Z_i\) to \(Z_j\).

I have chosen to present the Tauchen method because it is easy to understand. There are other similar methods that have been shown to be more accurate and reliable.

- Once we have the Markov chain approximation to the AR(1), taking a conditional expectation of a function of \(Z'\) conditional on \(Z\) is very easy. First, let’s store the transition probabilities in a transition matrix for which the columns correspond to the current state \(Z\) and for which the rows correspond to the future state \(Z'\)

- \[\begin{split}Q \equiv \left( \begin{array}{c c c c} Q_{1,1} & Q_{2,1} & \cdots Q_{J,1} \\ Q_{1,2} & Q_{2,2} & \cdots Q_{J,2} \\ \vdots & \vdots & \ddots & \vdots \\ Q_{1,J} & Q_{2,J} & \cdots Q_{J,J} \end{array} \right).\end{split}\]

- Second, we compute the value of the function at each grid point for \(Z\). Let’s store that in a row vector \(Y\)

- \[\begin{split}Y \equiv \left( \begin{array}{c c c c} F(Z_1) & F(Z_2) & \cdots & F(Z_J) \end{array} \right).\end{split}\]

Then if we take the matrix product \(Y Q\) we have a new row vector whose elements are \(\mathbb E \left[ F(Z') | Z_i \right]\).